[Paper] Swin Transformer: Hierarchical Vision Transformer using Shifted Windows

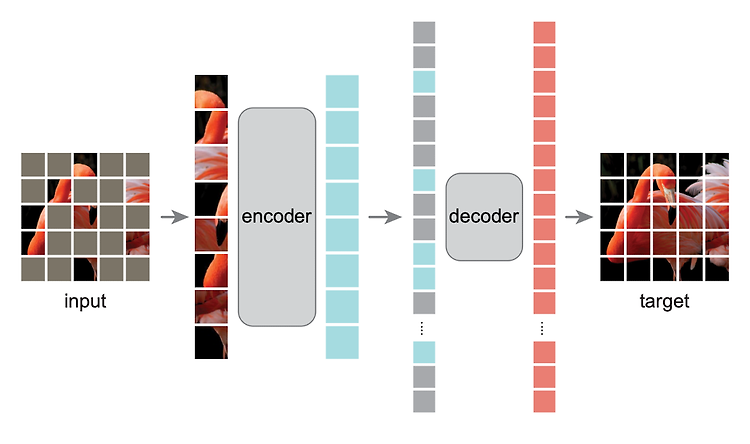

https://arxiv.org/abs/2103.14030

From the abstract: "This paper presents a new vision Transformer, called Swin Transformer, that capably serves as a general-purpose backbone for computer vision. Challenges in adapting Transformer from language to vision arise from differences between the two domains, such as .."

This post covers the paper accepted at ICCV 2021, Sw..